DMI – Graduate Course in Computer Science

Copyleft

2018 Giuseppe Scollo

outline:

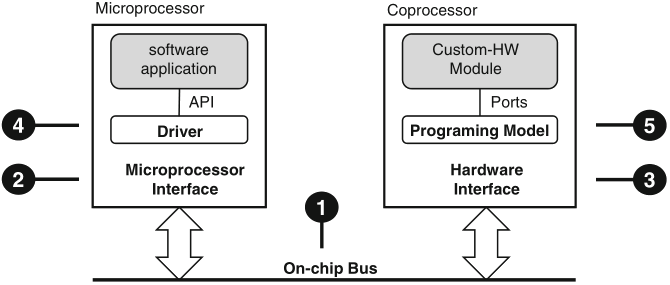

Schaumont, Figure 9.1 - The hardware/software interface

Figure 9.1 presents a synopsis of the elements in a HW/SW interface

the function of the HW/SW interface is to connect the software application to the custom-hardware module; this objective involves five elements:

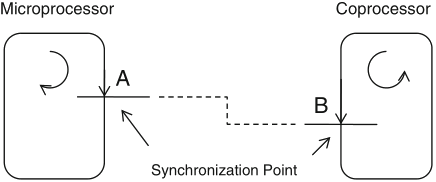

Schaumont, Figure 9.2 - Synchronization point

synchronization: the structured interaction of two otherwise independent and parallel entities

synchronization is needed to support communication between parallel subsystems: every talker needs to have a listener to be heard

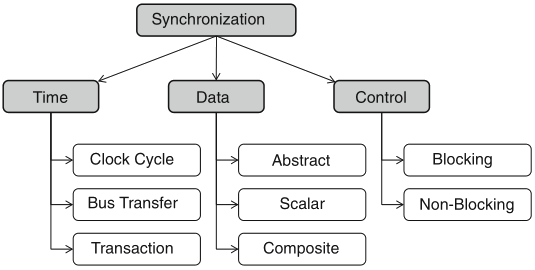

Schaumont, Figure 9.3 - Dimensions of the synchronization problem

three orthogonal dimensions of the synchronization problem:

semaphore: a synchronization primitive S that controls access to an abstract, shared resource, by operations:

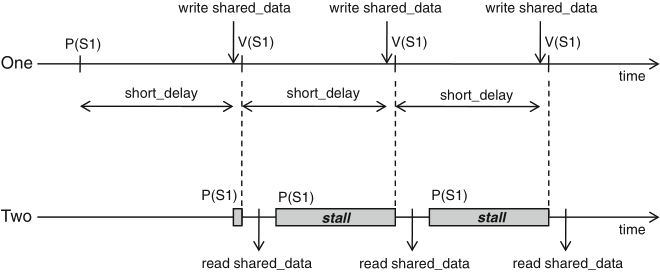

Schaumont, Figure 9.4 - Synchronization with a single semaphore

    int shared_data;
    semaphore S1;

    entity one {
      P(S1);
      while (1) {
        short_delay();
        shared_data = ...;
        V(S1);   // synchronization point
      }
    }

    entity two {
      short_delay();
      while (1) {
        P(S1);   // synchronization point
        received_data = shared_data;
      }
    }

Schaumont, Listing 9.1 - One-way synchronization with a semaphore

synchronization points: reached when entity one calls V(S1), thereby unlocking the stalled entity two

just assume the opposite, viz. move the short_delay() function call from the while-loop in entity one to the while-loop in entity two ...

the situation of unknown delays can be addressed with a two-semaphore scheme

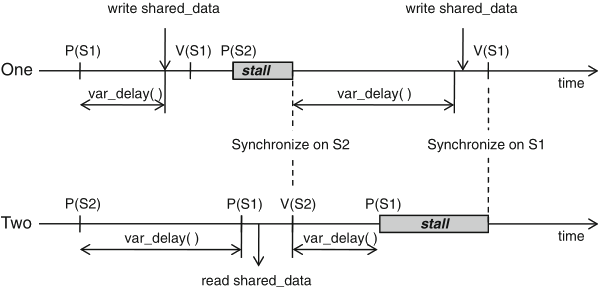

Schaumont, Figure 9.5 - Synchronization with two semaphores

    int shared_data;
    semaphore S1, S2;

    entity one {
      P(S1);
      while (1) {
        variable_delay();
        shared_data = ...;
        V(S1);   // synchronization point 1
        P(S2);   // synchronization point 2
      }
    }

    entity two {
      P(S2);
      while (1) {
        variable_delay();
        P(S1);   // synchronization point 1
        received_data = shared_data;
        V(S2);   // synchronization point 2
      }
    }

Schaumont, Listing 9.2 - Two-way synchronization with two semaphores
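the two-semaphore scheme of Listing 9.2 can be tried out on a host machine; the following is a minimal sketch, assuming POSIX threads and POSIX unnamed semaphores stand in for the two entities and for the P/V operations (`sem_wait`/`sem_post`). The names `run_two_way`, `n_items` and `received_sum` are hypothetical, and both semaphores are created with value 0, i.e. the state reached after the initial P(S1) and P(S2) calls in the listing:

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

/* Shared state, mirroring Listing 9.2. */
static int shared_data;
static sem_t S1, S2;
static int n_items;        /* hypothetical: number of transfers to perform */
static long received_sum;  /* hypothetical: checksum of the values read    */

/* Entity one: write a value, signal it, then wait for the acknowledgement. */
static void *entity_one(void *arg) {
    (void)arg;
    for (int i = 1; i <= n_items; i++) {
        shared_data = i;
        sem_post(&S1);  /* V(S1): synchronization point 1 */
        sem_wait(&S2);  /* P(S2): synchronization point 2 */
    }
    return NULL;
}

/* Entity two: wait for data, read it, then acknowledge. */
static void *entity_two(void *arg) {
    (void)arg;
    for (int i = 1; i <= n_items; i++) {
        sem_wait(&S1);  /* P(S1): synchronization point 1 */
        received_sum += shared_data;
        sem_post(&S2);  /* V(S2): synchronization point 2 */
    }
    return NULL;
}

/* Runs n transfers and returns the sum of all values received. */
long run_two_way(int n) {
    pthread_t t1, t2;
    assert(n >= 0);
    n_items = n;
    received_sum = 0;
    /* Both semaphores start at 0 (assumption, see the text above). */
    sem_init(&S1, 0, 0);
    sem_init(&S2, 0, 0);
    pthread_create(&t1, NULL, entity_one, NULL);
    pthread_create(&t2, NULL, entity_two, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    sem_destroy(&S1);
    sem_destroy(&S2);
    return received_sum;
}
```

since every written value is read exactly once, regardless of which thread runs faster, a call such as `run_two_way(100)` is expected to return the sum of 1 to 100.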

figure 9.5 illustrates the case where:

in parallel systems, a centralized semaphore may not be feasible; a common alternative is

if a sender or receiver arrives too early at a synchronization point, should it wait idle until the proper condition comes along, or should it go off and do something else?

both the semaphore and the handshake schemes discussed earlier implement a blocking data transfer
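by contrast, a non-blocking transfer returns immediately when the other side is not ready, so the caller can go off and do something else. The following is a minimal single-threaded sketch of that idea; the one-word mailbox, its `full` flag and the function names `try_send` and `try_receive` are hypothetical, and no atomicity between concurrent entities is modelled:

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical one-word mailbox shared by a sender and a receiver. */
typedef struct {
    int  data;
    bool full;   /* true while data holds a value not yet read */
} mailbox_t;

/* Non-blocking send: returns false at once if the previous value
   has not been consumed yet; the caller decides what to do next. */
bool try_send(mailbox_t *mb, int value) {
    assert(mb != 0);
    if (mb->full) return false;
    mb->data = value;
    mb->full = true;
    return true;
}

/* Non-blocking receive: returns false at once if nothing is available. */
bool try_receive(mailbox_t *mb, int *value) {
    assert(mb != 0 && value != 0);
    if (!mb->full) return false;
    *value = mb->data;
    mb->full = false;
    return true;
}
```

a blocking receive can be recovered from this by looping on `try_receive` until it succeeds; the non-blocking form leaves the choice between waiting and doing other work to the caller.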

computational speedup is often the motivation for the design of custom hardware

communication constraints need to be evaluated as well!

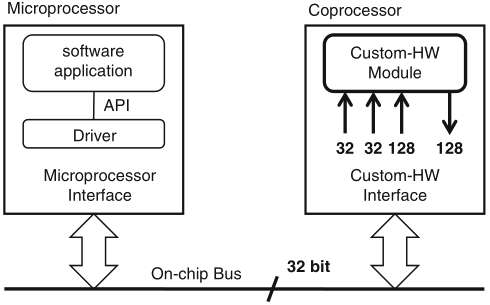

Schaumont, Figure 9.8 - Communication constraints of a coprocessor

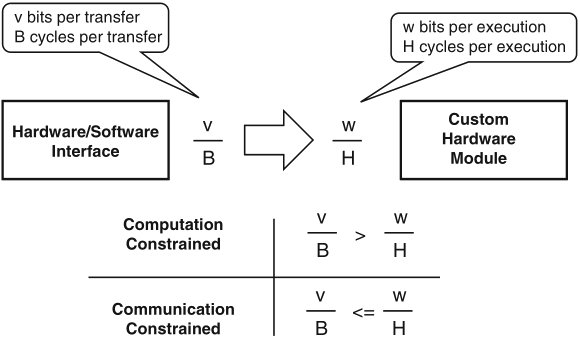

Schaumont, Figure 9.9 - Communication-constrained system vs. computation-constrained system

the number of clock cycles needed per execution of the custom hardware module is related to its hardware sharing factor (HSF), defined as the number of clock cycles available between consecutive I/O events

| Architecture | HSF |
|---|---|
| Systolic array processor | 1 |
| Bit-parallel processor | 1–10 |
| Bit-serial processor | 10–100 |
| Micro-coded processor | >100 |

Schaumont, Table 9.1 - Hardware sharing factor
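as a worked illustration of the HSF definition and of Table 9.1, the following sketch computes the HSF as the ratio of clock frequency to I/O event rate, which assumes a constant clock and a constant event rate. The function names and the enum are hypothetical; since the ranges in the table touch at 1, 10 and 100, treating each upper bound as inclusive is an arbitrary choice of this sketch:

```c
#include <assert.h>

/* Architecture classes listed in Table 9.1. */
typedef enum { SYSTOLIC_ARRAY, BIT_PARALLEL, BIT_SERIAL, MICRO_CODED } arch_t;

/* HSF: clock cycles available between consecutive I/O events.
   Assumes a constant clock and a constant I/O event rate, both in Hz. */
double hsf(double clock_hz, double io_events_per_sec) {
    assert(clock_hz > 0.0 && io_events_per_sec > 0.0);
    return clock_hz / io_events_per_sec;
}

/* Maps an HSF value to an architecture class of Table 9.1.
   Upper bounds are taken as inclusive (assumption of this sketch). */
arch_t suggest_architecture(double h) {
    if (h <= 1.0)   return SYSTOLIC_ARRAY;
    if (h <= 10.0)  return BIT_PARALLEL;
    if (h <= 100.0) return BIT_SERIAL;
    return MICRO_CODED;
}
```

for example, a module clocked at 100 MHz that handles 48,000 I/O events per second has more than 2,000 cycles available per event, which falls in the micro-coded range of the table.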

coupling indicates the level of interaction between execution flows in software and custom hardware

coupling relates synchronization with performance

| Factor | Coprocessor interface | Memory-mapped interface |
|---|---|---|
| Addressing | Processor-specific | On-chip bus address |
| Connection | Point-to-point | Shared |
| Latency | Fixed | Variable |
| Throughput | Higher | Lower |

Schaumont, Table 9.2 - Comparing a coprocessor interface with a memory-mapped interface
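on the software side, a memory-mapped interface is typically accessed through ordinary loads and stores to on-chip bus addresses. The following is a minimal sketch of that access pattern; the register layout `hw_regs_t`, the `STATUS_READY` bit and the function names are hypothetical and do not describe any specific module:

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical register layout of a memory-mapped hardware module. */
typedef struct {
    volatile uint32_t data_in;   /* operand written by software  */
    volatile uint32_t data_out;  /* result read back by software */
    volatile uint32_t status;    /* bit 0: result ready          */
} hw_regs_t;

#define STATUS_READY 0x1u

/* Writes one operand through the memory-mapped interface. */
void hw_write_operand(hw_regs_t *regs, uint32_t x) {
    assert(regs != 0);
    regs->data_in = x;
}

/* Returns 1 and stores the result if the module reports ready, else 0. */
int hw_try_read_result(hw_regs_t *regs, uint32_t *result) {
    assert(regs != 0 && result != 0);
    if ((regs->status & STATUS_READY) == 0) return 0;
    *result = regs->data_out;
    return 1;
}
```

on a target system the `regs` pointer would be obtained by casting the base address assigned to the module on the bus; on a host machine, an ordinary `hw_regs_t` variable can stand in for the hardware when testing the driver logic.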

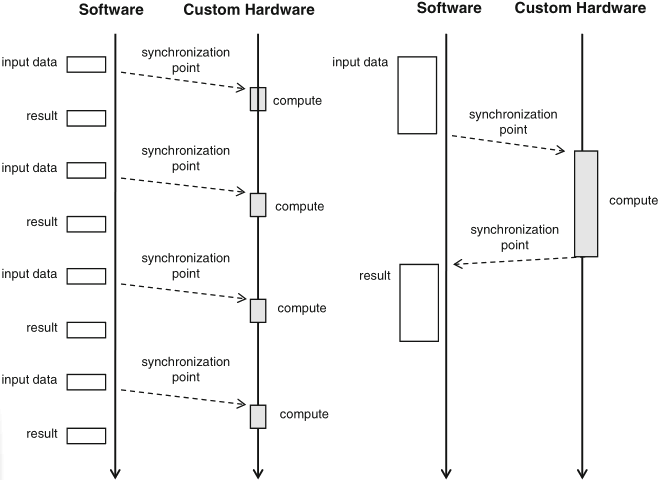

Schaumont, Figure 9.10 - Tight coupling versus loose coupling

example: difference between

N.B.: a high degree of parallelism in the overall design may be easier to achieve with a loosely-coupled scheme than with a tightly-coupled scheme

four families of on-chip bus standards, among the most widely used ones:

two main classes of bus configurations: shared and point-to-point

a generic shared bus and a point-to-point one are considered next, abstracting common features of all of them

| Bus | High-performance shared bus | Peripheral shared bus | Point-to-point bus |
|---|---|---|---|
| AMBA v3 | AHB | APB | |
| AMBA v4 | AXI4 | AXI4-lite | AXI4-stream |
| CoreConnect | PLB | OPB | |
| Wishbone | Crossbar topology | Shared topology | Point to point topology |
| Avalon | Avalon-MM | Avalon-MM | Avalon-ST |

Schaumont, Table 10.1 - Bus configurations for existing bus standards

| Legend | |
|---|---|
| AHB | AMBA high-speed bus |
| APB | AMBA peripheral bus |
| AXI | advanced extensible interface |
| PLB | processor local bus |
| OPB | on-chip peripheral bus |
| MM | memory-mapped |
| ST | streaming |

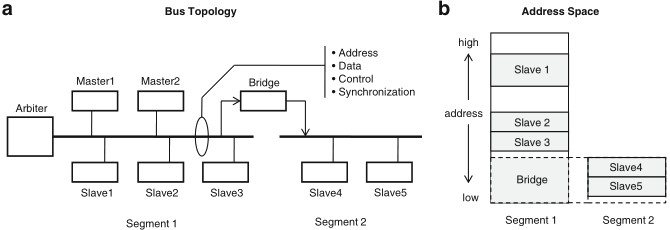

a shared bus on-chip typically consists of a few segments, connected by bridges; every transaction is initiated by a bus master, to which a slave responds; if they are on different segments, then the bridge acts as a slave on one side and as a master on the other side, while performing address translation

four classes of bus signals:

Schaumont, Figure 10.1 - (a) Example of a multi-master segmented bus system. (b) Address space for the same bus
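the roles of the address space and of the bridge can be sketched in a few lines: a decoder selects the slave whose address range contains the address issued by the master, and a bridge maps an address inside its slave-side window to the address it issues as a master on the other segment. All names, the `addr_range_t` type and the address values used in any call are hypothetical:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One entry of a hypothetical bus address map. */
typedef struct {
    uint32_t base;   /* first address of the range */
    uint32_t size;   /* range length in bytes      */
} addr_range_t;

/* Returns the index of the slave whose range contains addr, or -1. */
int decode_slave(const addr_range_t *map, size_t n, uint32_t addr) {
    for (size_t i = 0; i < n; i++) {
        if (addr >= map[i].base && addr - map[i].base < map[i].size)
            return (int)i;
    }
    return -1;
}

/* Bridge: translates an address inside its slave-side window into the
   address it issues as a master on the other bus segment. */
uint32_t bridge_translate(addr_range_t window, uint32_t remote_base,
                          uint32_t addr) {
    assert(addr >= window.base && addr - window.base < window.size);
    return remote_base + (addr - window.base);
}
```

the subtraction `addr - map[i].base` is compared against the size instead of computing `base + size`, so that a range ending at the top of the 32-bit address space does not overflow.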

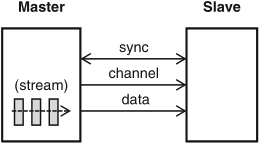

Schaumont, Figure 10.2 - Point-to-point bus

a point-to-point bus is a dedicated physical connection between a master and a slave, for unlimited stream data transfer

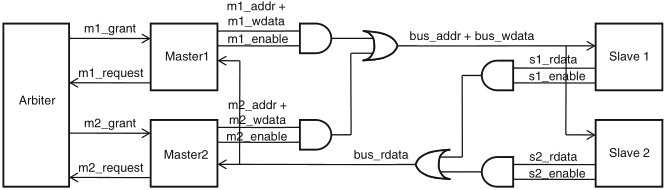

figure 10.3 shows the physical layout of a typical on-chip bus segment with two masters and two slaves, where AND and OR gates in the center of the diagram serve as multiplexers, of both address and data lines

Schaumont, Figure 10.3 - Physical interconnection of a bus. The *_addr, *_wdata, *_sdata signals are signal vectors. The *_enable, *_grant, *_request signals are single-bit signals
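the AND-OR multiplexing of figure 10.3 can be mimicked in software as follows. This is only a behavioural sketch for two masters and a 32-bit address vector; the function names are hypothetical, and each single-bit grant is expanded into a mask so that it gates every bit of the vector:

```c
#include <assert.h>
#include <stdint.h>

/* Expands a single-bit grant into an all-ones or all-zeros 32-bit mask. */
static uint32_t mask_of(unsigned grant) {
    return grant ? 0xFFFFFFFFu : 0u;
}

/* AND-OR multiplexer: each master's vector is ANDed with its grant and
   the results are ORed together. The result is meaningful only if at
   most one grant is set, which the arbiter is expected to guarantee. */
uint32_t bus_mux(uint32_t m1_addr, unsigned m1_grant,
                 uint32_t m2_addr, unsigned m2_grant) {
    assert(!(m1_grant && m2_grant));
    return (m1_addr & mask_of(m1_grant)) | (m2_addr & mask_of(m2_grant));
}
```

when no master is granted the bus, all AND outputs are zero and so is the multiplexed vector.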

signal naming convention about read/write data:

bus arbitration ensures that only one component may drive any given bus line at any time
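one simple way to obtain that guarantee is a fixed-priority arbiter, sketched below. Fixed priority is an assumption of this example, not a policy prescribed by the source; the function name `arbitrate` and the encoding of requests as bits of a word are hypothetical:

```c
#include <assert.h>

/* Fixed-priority arbiter: bit i of requests is the m_req of master i.
   Returns a grant word with at most one bit set; the lowest-numbered
   requesting master wins (assumed policy). */
unsigned arbitrate(unsigned requests) {
    /* Isolate the lowest set bit; yields 0 when nothing is requested. */
    unsigned grant = requests & (~requests + 1u);
    /* Postcondition: zero or exactly one grant bit is set. */
    assert((grant & (grant - 1u)) == 0u);
    return grant;
}
```

with requests from masters 1 and 2 (word `0x6`), only master 1 is granted (word `0x2`), so only one component drives the bus lines.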

naming conventions help one to infer functionality and connectivity of wires based on their names

a component pin name will reflect the functionality of that pin; bus signals, which are created by interconnecting component pins, follow a convention, too, in order to avoid confusion between similar signals

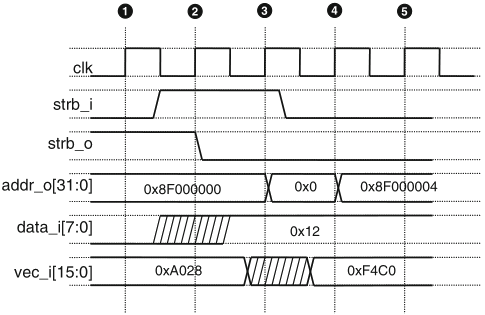

because of the inherently parallel nature of a bus system, timing diagrams are extensively used to describe the timing relationships of bus signals

Schaumont, Figure 10.4 - Bus timing diagram notation

the diagram in figure 10.4 shows the notation to describe the activities in a generic bus over five clock cycles

bus timing diagrams are very useful to describe the activities on a bus as a function of time

table 10.2 lists the signals that make up a generic bus, abstracting from any specific system

| Signal name | Meaning |
|---|---|
| clk | Clock signal. All other bus signals are referenced to the rising clock edge |
| m_addr | Master address bus |
| m_data | Data bus from master to slave (write operation) |
| s_data | Data bus from slave to master (read operation) |
| m_rnw | Read-not-Write. Control line to distinguish read from write operations |
| m_sel | Master select signal, indicates that this master takes control of the bus |
| s_ack | Slave acknowledge signal, indicates transfer completion |
| m_addr_valid | Used in place of m_sel in split-transfers |
| s_addr_ack | Used for the address in place of s_ack in split-transfers |
| s_wr_ack | Used for the write-data in place of s_ack in split-transfers |
| s_rd_ack | Used for the read-data in place of s_ack in split-transfers |
| m_burst | Indicates the burst type of the current transfer |
| m_lock | Indicates that the bus is locked for the current transfer |
| m_req | Requests bus access to the bus arbiter |
| m_grant | Indicates bus access is granted |

Schaumont, Table 10.2 - Signals on the generic bus
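to see how m_sel, m_rnw and s_ack cooperate in a simple transfer, the following cycle-level sketch models one master and one slave on the generic bus. It is a software approximation only: the slave with 16 words of storage, its fixed number of wait states, the use of word indices as addresses and all function names are hypothetical:

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Subset of the generic bus signals of Table 10.2. */
typedef struct {
    uint32_t m_addr, m_data, s_data;
    bool m_rnw, m_sel, s_ack;
} bus_t;

/* Hypothetical slave: 16 words, answers after a fixed number of waits. */
typedef struct {
    uint32_t mem[16];
    int wait_states;   /* cycles spent waiting before s_ack is raised */
    int counter;       /* cycles the current transfer has waited      */
} slave_t;

/* Evaluates the slave at one rising clock edge. */
static void slave_clock(slave_t *s, bus_t *b) {
    b->s_ack = false;
    if (!b->m_sel) { s->counter = 0; return; }
    if (s->counter < s->wait_states) { s->counter++; return; }
    if (b->m_rnw) b->s_data = s->mem[b->m_addr % 16];   /* read  */
    else          s->mem[b->m_addr % 16] = b->m_data;   /* write */
    b->s_ack = true;
    s->counter = 0;
}

/* Master side: drives one transfer and returns the number of clock
   cycles elapsed until s_ack was observed. */
int bus_transfer(bus_t *b, slave_t *s, bool rnw, uint32_t addr,
                 uint32_t *data) {
    int cycles = 0;
    assert(s->wait_states >= 0);
    b->m_addr = addr;
    b->m_rnw = rnw;
    b->m_sel = true;
    if (!rnw) b->m_data = *data;
    do { slave_clock(s, b); cycles++; } while (!b->s_ack);
    if (rnw) *data = b->s_data;
    b->m_sel = false;
    return cycles;
}
```

in this model a transfer to a slave with two wait states takes three clock cycles: two cycles with s_ack low, followed by the cycle in which the slave completes the transfer and raises s_ack.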

table 10.3 shows the correspondence of some of the generic bus signals to equivalent signals of the CoreConnect/OPB, AMBA/APB, Avalon-MM, and Wishbone busses

| generic | CoreConnect/OPB | AMBA/APB | Avalon-MM | Wishbone |
|---|---|---|---|---|
| clk | OPB_CLK | PCLK | clk | CLK_I (master/slave) |
| m_addr | Mn_ABUS | PADDR | Mn_address | ADDR_O (master), ADDR_I (slave) |
| m_rnw | Mn_RNW | PWRITE | Mn_write_n | WE_O (master) |
| m_sel | Mn_Select | PSEL | | STB_O (master) |
| m_data | OPB_DBUS | PWDATA | Mn_writedata | DAT_O (master), DAT_I (slave) |
| s_data | OPB_DBUS | PRDATA | Mb_readdata | DAT_I (master), DAT_O (slave) |
| s_ack | Sl_XferAck | PREADY | Sl_waitrequest | ACK_O (slave) |

Schaumont, Table 10.3 - Bus signals for simple read/write on CoreConnect/OPB, AMBA/APB, Avalon-MM and Wishbone busses

recommended readings:

readings for further consultation: