DMI – Graduate Course in Computer Science

Copyleft

2018 Giuseppe Scollo


outline:

key professional challenge in hardware-software codesign:

hardware and software are duals of one another in many respects

here is a comparative synopsis of their fundamental differences (Schaumont, Table 1.1)

| | Hardware | Software |
|---|---|---|
| Design paradigm | Decomposition in space | Decomposition in time |
| Resource cost | Area (# of gates) | Time (# of instructions) |
| Flexibility | Must be designed-in | Implicit |
| Parallelism | Implicit | Must be designed-in |
| Modeling | Model ≠ implementation | Model ∼ implementation |
| Reuse | Uncommon | Common |

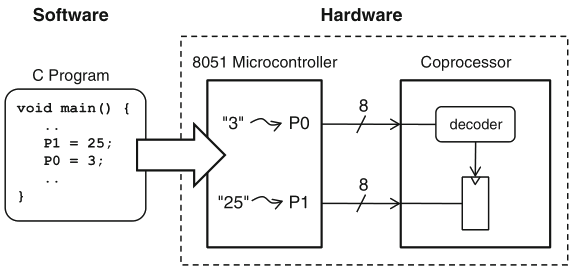

a simple example highlights the variety of models which come into play in hardware-software codesign:

Schaumont, Fig. 1.3 - A codesign model

the details of the formalization of this example in Gezel are omitted

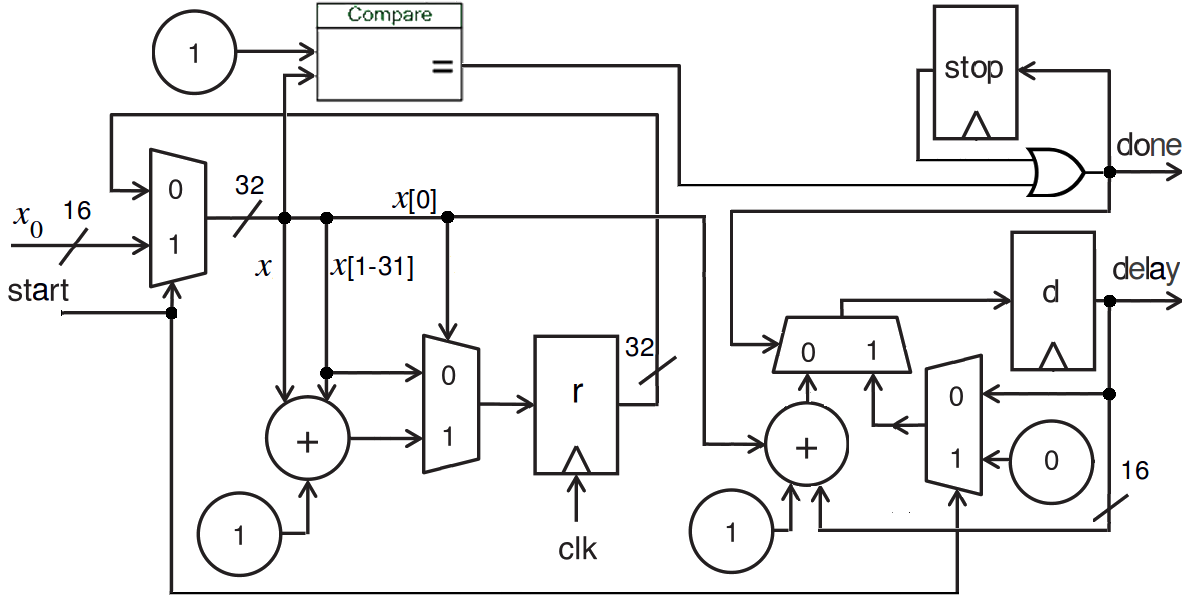

the hardware datapath presented in the first lecture could hardly serve as a coprocessor to accelerate the visualization of a Collatz trajectory

however, it may be embedded in a coprocessor that is designed to accelerate the computation of functions on a Collatz trajectory

for this purpose the circuit interface must be redefined and the circuit extended with some control logic, e.g. to stop the computation and output the result upon the first occurrence of '1' in the trajectory

an extension of the circuit seen in the first lecture that outputs not the trajectory but its delay, i.e. the number of steps taken to reach 1:

Hardware datapath for the delay of a Collatz trajectory

Gezel representation:

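    dp delay_collatz (
        in  start : ns(1) ;    // 1 loads x0 as the current trajectory value
        in  x0    : ns(16) ;   // initial value of the trajectory
        out done  : ns(1) ;    // 1 once the trajectory has reached 1
        out delay : ns(16))    // number of steps counted so far
    {
        reg r    : ns(32) ;    // trajectory value for the next cycle
        reg d    : ns(16) ;    // step counter
        reg stop : ns(1) ;     // latches done
        sig x    : ns(32) ;    // current trajectory value
        always {
            x     = start ? x0 : r ;
            // odd x: (3x + 1) / 2, i.e. two steps at once; even x: x / 2
            r     = x[0] ? x + (x >> 1) + 1 : x >> 1 ;
            done  = ( x == 1 ) | stop ;
            stop  = done ;
            d     = done ? ( start ? 0 : d ) : d + 1 + x[0] ;
            delay = d ;
        }
    }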
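
For comparison with the datapath above, here is a minimal software reference model in C. It is only a sketch: the function name `collatz_delay` and the guard for a zero input are assumptions made for illustration, not part of the lecture material. It counts steps the same way as the expression `d + 1 + x[0]`, i.e. an odd step and the halving that follows it count as two steps.

```c
#include <stdint.h>

/* Hypothetical reference model: number of Collatz steps from x0 down to 1.
   An odd value x is replaced by (3x + 1) / 2 and counted as two steps,
   mirroring "x + (x >> 1) + 1" and "d + 1 + x[0]" in the datapath. */
uint16_t collatz_delay(uint16_t x0)
{
    uint32_t x = x0;   /* 32 bits wide, like register r */
    uint16_t d = 0;    /* 16 bits wide, like register d */

    if (x == 0)
        return 0;      /* assumption: 0 never reaches 1, so give up at once */

    while (x != 1) {
        if (x & 1u) {
            x = x + (x >> 1) + 1;   /* (3x + 1) / 2 for odd x */
            d = (uint16_t)(d + 2);
        } else {
            x >>= 1;                /* x / 2 for even x */
            d = (uint16_t)(d + 1);
        }
    }
    return d;
}
```

For instance, the trajectory 3, 10, 5, 16, 8, 4, 2, 1 takes seven steps, so `collatz_delay(3)` yields 7.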

the interface of the datapath just seen suggests an easy implementation of the coprocessor as a memory-mapped I/O device, for example equipped with:

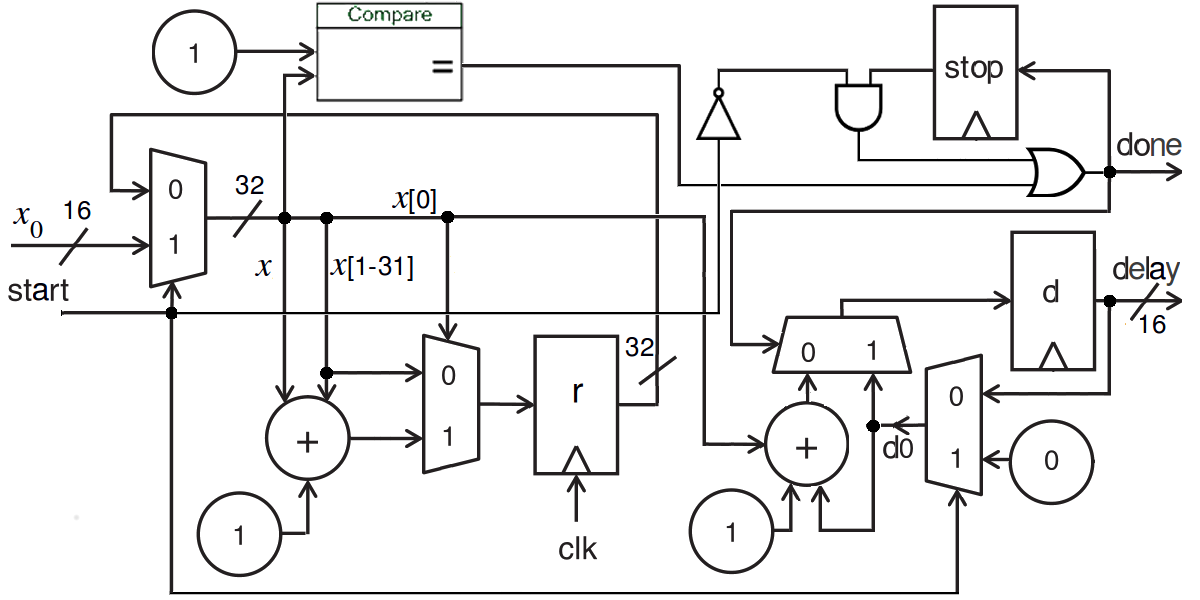
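
On the software side, access to such a memory-mapped device could be sketched in C as follows. The register layout, the type `collatz_regs` and the function `collatz_delay_hw` are hypothetical names chosen for illustration; the actual register map depends on the platform and is not specified here.

```c
#include <stdint.h>

/* Hypothetical register block, one 32-bit word per datapath port. */
typedef struct {
    volatile uint32_t x0;     /* write: initial value of the trajectory */
    volatile uint32_t start;  /* write: 1 to load x0, then 0 to run */
    volatile uint32_t done;   /* read: bit 0 is 1 when the result is ready */
    volatile uint32_t delay;  /* read: delay of the trajectory */
} collatz_regs;

/* Start one computation and busy-wait for its result.
   dev is assumed to point at the base address of the device. */
uint16_t collatz_delay_hw(collatz_regs *dev, uint16_t x0)
{
    dev->x0 = x0;
    dev->start = 1;                       /* raise start ... */
    dev->start = 0;                       /* ... and release it */
    while ((dev->done & 1u) == 0) {
        /* poll until the coprocessor signals completion */
    }
    return (uint16_t)dev->delay;
}
```

The `volatile` qualifier keeps the compiler from caching or reordering the register accesses. Polling is the simplest synchronization choice; an interrupt-driven variant would be an alternative.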

but... is the aforementioned datapath adequate to perform the required computation for subsequent interactions with the software?

revised circuit for the delay of Collatz trajectories:

Hardware datapath for the delay of Collatz trajectories

Gezel representation:

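    dp delay_collatz_rev (
        in  start : ns(1) ;    // 1 loads x0 and restarts the count
        in  x0    : ns(16) ;   // initial value of the trajectory
        out done  : ns(1) ;    // 1 once the trajectory has reached 1
        out delay : ns(16))    // number of steps counted so far
    {
        reg r    : ns(32) ;    // trajectory value for the next cycle
        reg d    : ns(16) ;    // step counter
        reg stop : ns(1) ;     // latches done until the next start
        sig x    : ns(32) ;    // current trajectory value
        sig d0, dd : ns(16) ;  // counter base and increment
        always {
            x     = start ? x0 : r ;
            // odd x: (3x + 1) / 2, i.e. two steps at once; even x: x / 2
            r     = x[0] ? x + (x >> 1) + 1 : x >> 1 ;
            // a new start overrides the done state latched by the previous run
            done  = ( x == 1 ) | ( stop & ~start ) ;
            stop  = done ;
            dd    = 1 + x[0] ;
            d0    = start ? 0 : d ;
            d     = done ? d0 : d0 + dd ;
            delay = d ;
        }
    }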
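
The difference between the two datapaths can be checked with a small cycle-level model in C. This is a sketch under stated assumptions: registers reset to 0, `start` is pulsed for exactly one clock cycle, and the names `dp_state`, `dp_cycle` and `dp_run` are hypothetical helpers, not part of Gezel.

```c
#include <stdint.h>

/* Register state common to both datapaths (assumed to reset to 0). */
typedef struct {
    uint32_t r;
    uint16_t d;
    uint8_t  stop;
} dp_state;

/* Simulate one clock cycle and return the 'done' output.
   revised == 0 models delay_collatz, revised != 0 models delay_collatz_rev. */
int dp_cycle(dp_state *s, int revised, int start, uint16_t x0)
{
    uint32_t x = start ? x0 : s->r;   /* sig x */
    unsigned odd = x & 1u;            /* x[0] */
    int done;
    uint16_t d_next;

    if (revised) {
        uint16_t d0 = start ? 0 : s->d;
        done = (x == 1) || (s->stop && !start);
        d_next = done ? d0 : (uint16_t)(d0 + 1 + odd);
    } else {
        done = (x == 1) || s->stop;
        d_next = done ? (uint16_t)(start ? 0 : s->d)
                      : (uint16_t)(s->d + 1 + odd);
    }

    /* Registers take their new values at the end of the cycle. */
    s->r = odd ? x + (x >> 1) + 1 : x >> 1;
    s->stop = (uint8_t)done;
    s->d = d_next;
    return done;
}

/* Pulse start for one cycle, clock until done, return the final delay. */
uint16_t dp_run(dp_state *s, int revised, uint16_t x0)
{
    int done = dp_cycle(s, revised, 1, x0);
    while (!done)
        done = dp_cycle(s, revised, 0, 0);
    return s->d;
}
```

In this model, two consecutive calls of `dp_run` on the same state with inputs 3 and 6 return 7 and then 0 for the original datapath, because `stop` stays set after the first run and `done` is asserted immediately. The revised datapath returns 7 and then 8, since `~start` masks the latched `stop` and `d0` restarts the count.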

concurrency and parallelism are not synonyms:

concurrency is a feature of the application,

parallelism is a feature of its implementation, that requires:

Amdahl's law bounds by 1/s the speed-up achievable through parallel execution of an application in which a fraction s of the execution is inherently sequential
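
As a worked instance of this bound, assume the usual formulation of Amdahl's law for n identical processors (the symbol n and the function S are introduced here for illustration):

$$ S(n) = \frac{1}{s + \dfrac{1 - s}{n}}, \qquad \lim_{n \to \infty} S(n) = \frac{1}{s} $$

For example, with s = 0.1 and n = 8 the speed-up is S(8) = 1 / (0.1 + 0.9/8) = 1 / 0.2125 ≈ 4.7, and no number of processors can push it beyond 1/s = 10.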

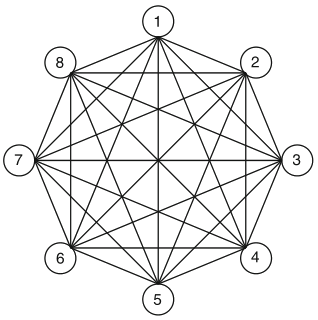

Schaumont, Fig. 1.9 - Eight node connection machine

is it difficult to devise concurrent algorithms for parallel architectures?

for example, consider the sum of n numbers on the CM, say with n = 8, with one summand initially assigned to each processor
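
One possible concurrent formulation of this sum is a pairwise reduction in log2(n) rounds, simulated sequentially in the C sketch below. It assumes that nodes whose indices differ in exactly one bit can exchange data directly (a hypercube-style network); this assumption, like the names `CM_NODES`, `CM_DIMS` and `cm_sum`, is made for illustration and is not taken from the figure.

```c
#include <stdint.h>

#define CM_NODES 8   /* n = 8 processors, one summand each */
#define CM_DIMS  3   /* log2(8) rounds */

/* Reduce v[0..7] in place; the total ends up in v[0].
   Returns the number of rounds performed. */
int cm_sum(int32_t v[CM_NODES])
{
    int rounds = 0;

    for (int k = 0; k < CM_DIMS; k++) {
        int bit = 1 << k;
        /* Within one round every addition involves a distinct pair of
           nodes, so on a parallel machine all of them could run at once. */
        for (int i = 0; i < CM_NODES; i++) {
            /* Receivers are the nodes whose bits 0..k are all zero. */
            if ((i & bit) == 0 && (i & (bit - 1)) == 0)
                v[i] += v[i | bit];   /* partner differs only in bit k */
        }
        rounds++;
    }
    return rounds;
}
```

With eight summands the sequential loop performs seven additions in total, but they are grouped into three rounds of four, two and one independent additions, which is where the parallel machine gains time.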

recommended readings:

for further consultation: